Inferless Review - Complete Directory Information

Basic Information

Tool Name: Inferless

Category: Machine Learning Deployment, Serverless GPU Inference, AI Infrastructure

Type: Web App, CLI, API

Official Website: https://www.inferless.com/

Developer/Company: Inferless Inc.

Launch Date: 2023 (Founded)

Last Updated: August 20, 2024 (New UI Launch)

Quick Overview

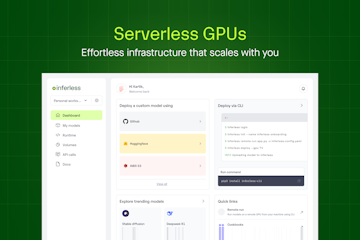

One-line Description: Deploy and scale machine learning models on serverless GPUs with ultra-low cold starts.

What it does: Inferless provides a serverless GPU inference platform that allows developers and businesses to effortlessly deploy and scale machine learning (ML) models. It focuses on significantly reducing cold start times and simplifying infrastructure management, enabling users to pay only for the GPU resources they consume. The platform offers features like automated scaling, custom runtimes, and continuous integration/continuous deployment (CI/CD) to streamline the ML model deployment process.

Best for: Developers, data scientists, startups, and technology companies seeking to deploy ML models quickly, cost-efficiently, and at scale without the complexities of managing traditional GPU infrastructure. It is particularly well-suited for applications with "spiky and unpredictable workloads" and those requiring low latency.

Key Features

- Effortless Deployment: Deploy machine learning models in minutes using a web-based UI, CLI, or remote execution capabilities, supporting models from Hugging Face, Git, Docker, AWS S3, and Google Cloud Buckets.

- Dynamic Autoscaling: Automatically scales from zero to hundreds of GPUs based on demand, handling unpredictable workloads efficiently with an in-house load balancer to minimize overhead and avoid idle costs.

- Lightning-Fast Cold Starts: Optimizes model loading to achieve sub-second response times, even for large models, by using high IOPS storage close to GPUs, drastically reducing typical cold start latencies.

- Custom Runtimes: Allows users to customize the container environment with specific software and dependencies required for their models.

- Persistent Volumes: Provides NFS-like writable volumes that support simultaneous connections to various model replicas for data management.

- Automated CI/CD: Enables automatic rebuilding and redeployment of models upon repository updates, eliminating the need for manual re-imports and ensuring the latest model versions are always in use.

- Advanced Monitoring: Offers detailed call and build logs, along with real-time metrics (total calls, cold start times, GPU usage), to monitor and refine models efficiently.

- Dynamic Batching: Increases throughput by combining multiple server-side requests, optimizing GPU utilization and performance under varying loads.

- Private Endpoints: Allows customization of endpoint settings such as scale-down, timeout, and concurrency, offering fine-grained control over model deployment.
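To make the dynamic batching idea above concrete, here is a minimal sketch of server-side request batching. It is a generic illustration, not Inferless's implementation: the names `batch_requests`, `run_batched`, and `max_batch_size` are hypothetical, and production batchers typically also cap how long a request may wait for a batch to fill.

```python
from typing import Callable, List, Sequence


def batch_requests(pending: Sequence[str], max_batch_size: int) -> List[List[str]]:
    """Group queued requests into batches of at most max_batch_size items."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    return [
        list(pending[i:i + max_batch_size])
        for i in range(0, len(pending), max_batch_size)
    ]


def run_batched(
    pending: Sequence[str],
    max_batch_size: int,
    model_fn: Callable[[List[str]], List[str]],
) -> List[str]:
    """Call the model once per batch instead of once per request."""
    results: List[str] = []
    for batch in batch_requests(pending, max_batch_size):
        # One model invocation serves the whole batch.
        results.extend(model_fn(batch))
    return results


if __name__ == "__main__":
    # Hypothetical stand-in for a model: upper-cases each input.
    outputs = run_batched(["a", "b", "c", "d", "e"], 2, lambda b: [s.upper() for s in b])
    print(outputs)
```

With five queued requests and a batch size of 2, the stand-in model is invoked three times rather than five, which is where the throughput gain comes from.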

Pricing Structure

Free Plan:

- $30 Free Credit to start.

- Free 50GB/month storage.

- Pay-per-second usage for GPU inference.

Paid Plans: Inferless primarily operates on a pay-as-you-go model, where costs are determined by the GPU type and duration of use. There isn't a fixed monthly subscription in the traditional sense, but features scale with usage.

- Usage-Based Billing: Charged per second for GPU resources consumed, with different rates for various NVIDIA GPU types (T4, A10, A100).

- Volume Pricing: Additional storage beyond 50GB/month is charged at $0.30/GB/month.
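The billing rules above can be turned into a rough monthly estimate. The sketch below uses the storage figures from this review (50GB free, $0.30 per additional GB per month); the per-second GPU rate is a caller-supplied parameter because actual rates vary by GPU type and are not listed here. The function name and the example rate are hypothetical.

```python
FREE_STORAGE_GB = 50.0            # free monthly storage, per this review
STORAGE_RATE_PER_GB_MONTH = 0.30  # charge per GB beyond the free allowance


def estimate_monthly_cost(
    gpu_seconds: float,
    gpu_rate_per_second: float,
    storage_gb: float,
) -> float:
    """Estimate a monthly bill in USD from GPU seconds used and storage held."""
    if gpu_seconds < 0 or gpu_rate_per_second < 0 or storage_gb < 0:
        raise ValueError("inputs must be non-negative")
    gpu_cost = gpu_seconds * gpu_rate_per_second
    # Only storage above the free allowance is billed.
    billable_storage_gb = max(0.0, storage_gb - FREE_STORAGE_GB)
    storage_cost = billable_storage_gb * STORAGE_RATE_PER_GB_MONTH
    return round(gpu_cost + storage_cost, 2)


if __name__ == "__main__":
    # Assumed example: 10 GPU-hours at a made-up $0.0005/second, 70GB stored.
    print(estimate_monthly_cost(10 * 3600, 0.0005, 70))
```

In the assumed example, 10 GPU-hours cost $18.00 and the 20GB over the allowance cost $6.00. Check the official pricing page for the real per-second rate of each GPU type before relying on an estimate like this.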

Enterprise: Contact for custom pricing and advanced features. Features typically include higher GPU concurrency (e.g., up to 50), longer log retention (365 days), and dedicated support via private Slack connect and a support engineer.

Free Trial: $30 free credit to test the platform.

Money-back Guarantee: Fees are generally non-cancelable and non-refundable, except if Inferless breaches its obligations under the agreement, in which case a prorated refund of prepaid fees may be available upon user account termination.

Pricing Plans Explained

Free Tier (with $30 Credit)

What you get: Access to the Inferless platform to deploy machine learning models using serverless GPUs. You receive an initial $30 credit to cover your usage costs and 50GB of free storage per month.

Perfect for: Individuals, students, researchers, and small teams looking to experiment with serverless ML deployment, test model performance, and evaluate the platform's capabilities without an upfront financial commitment.

Limitations: Usage is limited by the $30 credit and 50GB storage. Once credits are consumed, regular pay-as-you-go rates apply. Support is likely community-based or through general channels.

Technical terms explained:

- Serverless GPU: This means you don't need to set up or manage physical or virtual GPU servers. Inferless handles all the underlying infrastructure, allowing you to deploy your models without worrying about provisioning hardware or maintaining it. You only pay for the computational power (GPU usage) when your models are actively processing requests.

- Cold Start: This refers to the delay experienced when a machine learning model, particularly a large one, is first loaded and initialized to serve a request after a period of inactivity. Inferless significantly reduces this delay, ensuring faster response times.

Usage-Based (Standard Pay-as-you-go)

What you get: Full access to Inferless's serverless GPU inference platform, where you pay per second for the GPU resources your models consume. You can choose from various NVIDIA GPU types (T4, A10, A100) with different specifications and corresponding per-second/per-hour rates. Storage beyond 50GB/month is also billed. This tier provides unlimited deployed webhook endpoints, GPU concurrency (e.g., up to 5-10 max replicas, depending on resource availability), and 15 days of log retention.

Perfect for: Fast-growing startups, independent developers, and small to medium businesses needing flexible, scalable, and cost-efficient ML model deployment for production workloads. It's ideal for those with fluctuating demand who want to avoid fixed infrastructure costs.

Key upgrades from free: Continuous usage beyond the initial $30 credit, access to a wider range of GPU types for more demanding models, and features suitable for production environments. Support may include a private Slack Connect channel with responses within 48 working hours.

Technical terms explained:

- GPU RAM: Graphics Processing Unit Random Access Memory. This is the memory available directly to the GPU for processing data. More GPU RAM allows for larger and more complex models to run efficiently.

- vCPUs: Virtual Central Processing Units. These are the virtualized processing cores that support the GPU and handle general computing tasks, such as data pre-processing and post-processing, alongside the GPU's specialized tasks.

- RAM: Random Access Memory. This is the main system memory available to the instance running your model, separate from GPU RAM.

- Concurrency: This refers to the number of requests or model instances that can be processed simultaneously. Higher concurrency means the platform can handle more incoming requests at the same time.

- Log Retention: The period for which your operational logs (call logs, build logs) are stored by Inferless, allowing for debugging and performance analysis.
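To show how concurrency and replica limits interact, the sketch below computes how many replicas a simple autoscaler would want for a given number of in-flight requests. This is an illustrative rule, not Inferless's actual scaling algorithm; `desired_replicas` and its parameters are hypothetical names loosely modeled on the min/max replica and container concurrency settings described in this review.

```python
import math


def desired_replicas(
    in_flight_requests: int,
    container_concurrency: int,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Return the replica count needed to serve the current load, within limits."""
    if container_concurrency < 1:
        raise ValueError("container_concurrency must be at least 1")
    if min_replicas < 0 or max_replicas < min_replicas:
        raise ValueError("require 0 <= min_replicas <= max_replicas")
    # Each replica handles up to container_concurrency requests at once.
    needed = math.ceil(in_flight_requests / container_concurrency)
    # Clamp to the plan's limits; min_replicas=0 allows scale-to-zero.
    return max(min_replicas, min(needed, max_replicas))


if __name__ == "__main__":
    for load in (0, 9, 100):
        print(load, desired_replicas(load, container_concurrency=4, min_replicas=0, max_replicas=10))
```

With a minimum of zero replicas, no traffic means no running GPUs, which is what makes scale-to-zero billing possible. A higher replica cap, such as the enterprise example of 50, raises the load the deployment can absorb before requests queue.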

Enterprise Plan

What you get: All features of the usage-based tier, with enhanced capabilities tailored for large organizations. This typically includes much higher GPU concurrency (e.g., up to 50 max replicas), extended log retention (365 days), and dedicated premium support through a private Slack connect channel and a dedicated support engineer. Pricing is custom and usually involves direct contact for a quote.

Perfect for: Large enterprises, organizations with very high-volume, mission-critical AI applications, or those with specific compliance, security, and support requirements.

Key upgrades: Significantly higher scaling limits, extended data retention for compliance and in-depth analysis, and personalized, rapid-response customer support.

Technical terms explained:

- GPU Concurrency (Enterprise): For enterprise-level needs, Inferless offers even greater flexibility, allowing for a higher number of simultaneous model instances or requests (e.g., up to 50 replicas) to handle massive inference loads.

- Dedicated Support Engineer: A specialized support professional assigned to your account to provide personalized assistance, troubleshooting, and optimization guidance, ensuring smooth operations for critical workloads.

Pros & Cons

| The Good Stuff (Pros) | The Not-So-Good Stuff (Cons) |
|---|---|
| ✅ Blazing-Fast Cold Starts: Significantly reduces model load times, ensuring low-latency inference. | ❌ Limited Fixed Plan Transparency: Detailed fixed-rate plans (beyond usage) are not prominently displayed, requiring users to rely on usage-based calculations. |
| ✅ Cost-Efficiency & Pay-per-use: Users only pay for active GPU usage, eliminating idle costs and potentially saving up to 80% on cloud bills. | ❌ Dependence on Service Provider: As a managed serverless platform, users relinquish some control over underlying infrastructure, which might not suit those with strict customization needs. |
| ✅ Seamless Autoscaling: Automatically scales GPU resources from zero to millions of requests with minimal overhead. | ❌ Data Export Formats: Specific data export formats for logs and metrics are not explicitly detailed. |
| ✅ User-Friendly Deployment (UI/CLI): Offers both an intuitive web interface and a powerful command-line interface for easy model deployment. | ❌ No Dedicated Desktop/Mobile Apps: Primarily a web-based and CLI tool; no mention of dedicated desktop or mobile applications. |
| ✅ Robust Integrations: Supports model import from popular sources like Hugging Face, Git, Docker, AWS S3, and Google Cloud Buckets. | ❌ Refund Policy: Fees are generally non-refundable unless Inferless breaches its obligations. |
| ✅ Strong Security & Compliance: SOC 2 Type 2, ISO 27001, and GDPR compliant, ensuring high data security and privacy standards. | |
| ✅ Automated CI/CD: Simplifies continuous deployment with automatic model updates from source repositories. | |
| ✅ Customization Options: Allows for custom runtimes, persistent volumes, and fine-grained control over endpoints. | |

Use Cases & Examples

Primary Use Cases:

- Real-time AI Inference: Deploying trained machine learning models to provide immediate predictions in response to live data streams, crucial for applications requiring instant responses.

- LLM Chatbots & AI Agents: Hosting large language models for conversational AI applications, customer service chatbots, or AI agents that need to process and generate text rapidly.

- Computer Vision Applications: Running models for image recognition, object detection, or video analysis where fast inference on GPU is critical for processing visual data.

- Audio Generators & Processing: Deploying models for tasks like text-to-speech (TTS), voice cloning, or other audio synthesis and analysis, where GPU power is often required.

- Batch Processing of ML Workloads: Efficiently handling large volumes of data for inference in batches, scaling resources up and down as needed to process intermittent or heavy workloads cost-effectively.

Real-world Examples:

- A company like Cleanlab used Inferless to deploy their Trustworthy Language Model (TLM), saving almost 90% on GPU cloud bills and going live in under a day, particularly for latency-sensitive applications with unpredictable, spiky workloads.

- Spoofsense leveraged Inferless's dynamic batching feature to dramatically improve their performance at scale.

- A user deployed their custom embedding model for "Myreader" on Inferless GPU, processing hundreds of books daily with minimal cost, noting the ability to share a single GPU with multiple models and only paying for hours used.

Technical Specifications

Supported Platforms: Web (UI-based access), Command-Line Interface (CLI), API.

Browser Compatibility: Expected to be compatible with modern web browsers (e.g., Chrome, Firefox, Safari, Edge) for its web-based UI.

System Requirements: As a serverless cloud platform, local system requirements are minimal, primarily a web browser for the UI or a terminal for the CLI.

Integration Options: Hugging Face, Git (Custom Code), Docker, Cloud Buckets (AWS S3, Google Cloud Storage), File Import from System, Model Alerts using AWS SNS.

Data Export: Detailed call and build logs are available for monitoring, but specific data export formats (e.g., CSV, JSON for metrics) are not explicitly detailed.

Security Features: SOC 2 Type 2 certified, ISO 27001 certified, GDPR compliant, penetration tested, and undergoes regular vulnerability scans.

User Experience

Ease of Use: ⭐⭐⭐⭐⭐ (4.5 out of 5) - Users praise its "seamless" and "user-friendly deployment process," making it easy to integrate ML models.

Learning Curve: Beginner-friendly to Intermediate. The platform offers quick start guides and supports both UI and CLI deployment, indicating accessibility for different skill levels, though advanced customization might require some technical understanding.

Interface Design: Clean and modern, with a new UI launched in August 2024 designed to streamline workflows and boost productivity by simplifying model deployment into one page.

Mobile Experience: The web application may be accessible on mobile browsers, but Inferless does not offer a dedicated mobile app. A note on the website suggests switching to a larger screen for a more immersive and user-friendly experience.

Customer Support: Available via email (contact@inferless.com) and, for higher-tier users, through a private Slack Connect channel with responses within 48 working hours. An AI assistant named "Socai" is also mentioned for instant guidance in the new UI.

Alternatives & Competitors

Direct Competitors:

- Cerebrium: A comprehensive ML development, deployment, and monitoring platform also offering serverless GPU inference with low cold starts and various GPU types. Cerebrium emphasizes developer experience and cost-predictable AI inference.

- ComfyOnline: A serverless platform specifically for running ComfyUI workflows, simplifying AI application development by managing dependencies and model downloads, enabling easy API generation and automatic scaling.

- DSensei: A serverless hosting platform focused on lightning-fast LLM model deployment, aiming for cost-effectiveness, flexibility, and quick start times without vendor lock-in.

When to choose this tool over alternatives: Inferless excels for organizations prioritizing ultra-low cold start times and a pure pay-per-second serverless model for GPU inference. Its strong emphasis on automated CI/CD, comprehensive monitoring, and robust security certifications (SOC 2, ISO 27001, GDPR) makes it a strong choice for businesses needing enterprise-grade reliability and compliance with predictable costs for spiky ML workloads.

Getting Started

Setup Time: Fast. A first deployment can take minutes, and users have reported getting models into a staging environment in approximately 4 hours and into production in about a day.

Onboarding Process: Self-guided with quick start guides, video walkthroughs, and documentation. Users can deploy models via UI or CLI.

Quick Start Steps:

- Create an Account: Sign up on the Inferless platform, possibly logging in with GitHub. You'll receive $30 in free credits.

- Choose Deployment Method: Decide between using the intuitive web UI or the powerful CLI.

- Import Your Model: Connect your model repository (Hugging Face, Git, Docker, AWS S3, GCP) or upload a file.

- Configure & Deploy: Select your desired GPU type (e.g., T4, A10, A100), configure settings like min/max replicas, inference timeout, and container concurrency, then deploy your model with a single click or command.

- Monitor & Optimize: Utilize the dashboard for real-time metrics and logs, and leverage features like dynamic batching and private endpoints for ongoing optimization.

User Reviews & Ratings

Overall Rating: ⭐⭐⭐⭐ (4.4 out of 5 stars) based on several user reviews.

Popular Review Sites:

- Product Hunt: Achieved #1 rank upon launch for its serverless inference offering.

- Inferless.com (user testimonials): Many users praise its efficiency and cost savings.

Common Praise:

- User-Friendly Deployment: The platform offers a smooth and easy process for integrating machine learning models.

- Low Cold Start Latency: Provides minimal cold start times, ensuring fast response rates even during fluctuating usage.

- Cost Efficiency: Offers serverless GPU resources, allowing users to pay only for what they use, leading to significant cost reductions (e.g., up to 90% savings).

- High Uptime: Boasts an uptime of 99.993%, indicating reliability and minimal downtime.

- Excellent Support: Users have specifically highlighted the "awesome" and "always available" support.

Common Complaints:

- Loss of Control: Using managed services means relinquishing some control over the infrastructure, which might not be ideal for organizations with very specific or strict customization needs.

- Dependence on Service Provider: Users are dependent on Inferless for infrastructure, which can pose challenges during service interruptions (though high uptime is reported).

Updates & Roadmap

Update Frequency: Frequent, with monthly newsletters detailing new features and enhancements.

Recent Major Updates:

- August 20, 2024: Launch of a new UI designed for streamlined deployment, resource management, and cost optimization, featuring clear visuals for GPU specs, pricing, and performance metrics, a new playground, and separated inference/build logs.

- June 2024: Introduction of streaming APIs with SSE, enhanced CLI commands, model management APIs (Runtime Patch, Machine Settings Configuration, Fetch Model Logs), and the open-source NVIDIA Triton Copilot.

- February 26, 2024: Achieved SOC 2, ISO 27001, and GDPR compliance.

Upcoming Features: Information on upcoming features is generally shared through their monthly newsletters and blog posts.

Support & Resources

Documentation: Comprehensive documentation is available, including quick start guides, conceptual overviews, and detailed instructions for various integrations and CLI commands.

Video Tutorials: Available on their YouTube channel, including product walkthroughs and guides on deploying models and using specific features.

Community: No forum or Discord server is explicitly mentioned, though the monthly newsletters suggest an active community.

Training Materials: Tutorials and guides are available on their website and blog, covering topics like reinforcement learning and choosing the right text-to-speech models.

API Documentation: API reference and model management APIs are available for programmatic control.

Frequently Asked Questions (FAQ)

General Questions

Q: Is Inferless free to use? A: Inferless operates on a pay-as-you-go model. New users receive a $30 free credit to get started and 50GB of free storage per month. After the credit is used, you pay only for the GPU resources you consume.

Q: How long does it take to set up Inferless? A: Inferless boasts rapid deployment. You can typically get a model into a staging environment in about 4 hours, and deploy to production within a day.

Q: Can I cancel my subscription anytime? A: Inferless uses a usage-based billing model. You can disable your model anytime to stop billing. Fees are generally non-refundable unless Inferless breaches its obligations, in which case a prorated refund may be available upon account termination.

Pricing & Plans

Q: What's the difference between Inferless's pricing and traditional cloud GPU providers? A: Inferless offers a true serverless, pay-per-second model, meaning you only pay when your models are actively processing requests. This avoids idle costs common with traditional GPU clusters where you often pay for always-on instances, leading to significant cost savings (up to 80-90%).
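As a rough check on the savings figure in this answer, the sketch below compares an always-on GPU with pay-per-second billing. It rests on a strong simplifying assumption that both options charge the same per-second rate, which often does not hold in practice since serverless rates are commonly higher per second. Under that assumption, the saving equals the fraction of the day the GPU would otherwise sit idle. The function name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def idle_savings_fraction(busy_seconds_per_day: float) -> float:
    """Fraction of an always-on GPU bill avoided by paying only for busy seconds.

    Assumes the same per-second price for both billing models.
    """
    if not 0 <= busy_seconds_per_day <= SECONDS_PER_DAY:
        raise ValueError("busy_seconds_per_day must be between 0 and 86400")
    return 1.0 - busy_seconds_per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    # A model busy 2.4 hours per day is idle 90% of the time.
    print(f"{idle_savings_fraction(2.4 * 3600):.0%}")
```

Savings in the quoted 80-90% range therefore correspond to workloads that keep a GPU busy only about 10-20% of the time. A steadily loaded model would see much smaller savings, or none.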

Q: Are there any hidden fees or setup costs? A: Inferless's pricing is transparent, based on per-second GPU usage and additional storage beyond the free 50GB. There are no explicit setup fees mentioned.

Q: Do you offer discounts for students/nonprofits/annual payments? A: Information on specific discounts for students or nonprofits is not publicly available. The pricing model is pay-as-you-go, so annual payment discounts are not applicable in the traditional sense, but large-scale enterprise use may involve custom agreements.

Features & Functionality

Q: Can Inferless integrate with common ML development platforms? A: Yes, Inferless supports importing models from popular platforms like Hugging Face, Git, Docker, AWS S3, and Google Cloud Buckets, allowing for seamless integration into existing ML workflows.

Q: What kind of models can I deploy on Inferless? A: You can deploy any custom machine learning model, as well as popular open-source models like Stable Diffusion, GPT-J, DeepSeek, Gemini, and Mistral.

Q: Is my data secure with Inferless? A: Yes, Inferless emphasizes enterprise-level security. It is SOC 2 Type 2, ISO 27001, and GDPR compliant, undergoing penetration testing and regular vulnerability scans to protect your data.

Technical Questions

Q: What devices/browsers work with Inferless? A: Inferless is a web-based platform, so it works with modern web browsers on most devices. It also offers a Command-Line Interface (CLI) for those who prefer programmatic control. There are no dedicated desktop or mobile applications.

Q: Do I need to download anything to use Inferless? A: For UI-based deployment, you only need a web browser. For CLI deployment, you would need to install the Inferless CLI tool.

Q: What if I need help getting started? A: Inferless provides comprehensive documentation, quick start guides, video tutorials, and API documentation. General support is available via email, and higher-tier users receive dedicated support via private Slack connect. An AI assistant, Socai, is also integrated into the UI for instant help.

Final Verdict

Overall Score: 8.8/10

Recommended for:

- Machine learning engineers and data scientists needing a robust, scalable, and cost-efficient platform for deploying ML models.

- Startups and businesses with fluctuating or "spiky" inference workloads who want to minimize idle GPU costs.

- Organizations prioritizing low-latency inference and minimal cold start times for their AI applications.

- Companies that require strong security and compliance (SOC 2, ISO 27001, GDPR) for their ML infrastructure.

Not recommended for:

- Users who require absolute low-level control over their GPU infrastructure.

- Those strictly looking for fixed-price monthly plans without any usage-based billing component.

Bottom Line: Inferless is a powerful and highly efficient serverless GPU inference platform that excels at reducing cold start times and optimizing costs for ML model deployment. Its pay-as-you-go model and robust feature set, backed by strong security compliance, make it an attractive solution for any team looking to scale their AI applications without the complexities and expenses of traditional infrastructure management. It’s particularly well-suited for dynamic, real-time inference needs.

Last Reviewed: September 9, 2025

Reviewer: Toolitor Analyst

This review is based on publicly available information and verified user feedback. Pricing and features may change - always check the official website for the most current information.